Extract – Transform – Load

Our team of ETL experts takes a programmatic approach to ETL. Our engineers are well experienced with platforms such as Azure SQL, SSIS, Informatica, IBM DataStage, Cognos, and Ab Initio.

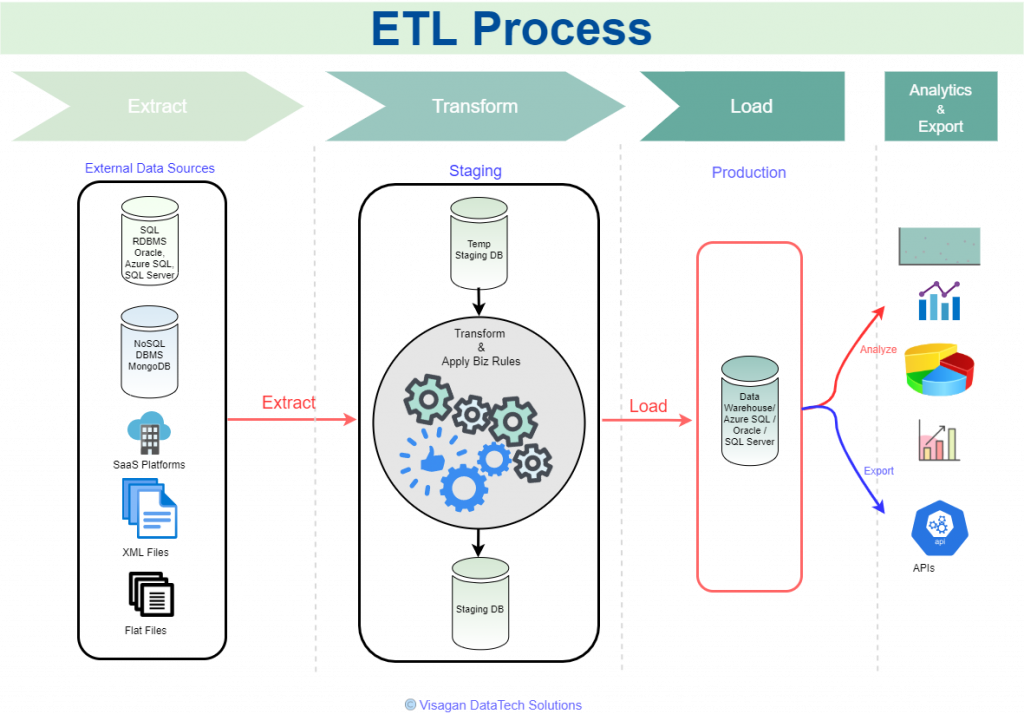

VDS has a team of ETL experts who are well experienced in data integration: the three distinct but interrelated steps (Extract, Transform, and Load) used to synthesize data from multiple sources, often to build a Data Warehouse, Data Hub, or Data Lake. Our team understands that data must be properly formatted and prepared before it is loaded into the data storage system of your choice. The three ETL functions are often combined into a single application or suite of tools, which helps in the following areas:

- Offer deep historical context for business.

- Enhance Business Intelligence solutions for decision-making.

- Enable context and data aggregations so that businesses can generate higher revenue and/or save money.

- Enable a common data repository.

- Allow verification of data transformation, aggregation, and calculation rules.

- Allow sample data comparison between source and target systems.

- Improve productivity by codifying processes so they can be reused without additional technical skills.

VDS specializes in end-to-end data ingestion, including:

- Data Extraction

- Data Cleansing

- Transformation

- Load

We adopt an approach that matches your organization’s needs and business requirements while covering all of the above stages.

Further, VDS works through the four stages of ETL by exploring and employing the strategies below:

- Know and understand your data sources: where you need to extract data from

- Databases (Oracle, Azure SQL, SQL Server, etc.)

- Flat Files

- Web Services

- Other sources, such as RSS feeds

- Audit your data source

- Assess the data quality and utility for a specific purpose.

- Look at key metrics other than quantity, such as completeness, validity, and uniqueness.

- Arrive at a conclusion about the properties of the data set.

- Study your approach for optimal data extraction

- Design the extraction process to avoid adverse effects on the source system in terms of performance, response time, and locking.

- Push Notification: used when the source system can send a notification that records have been modified, along with the details of the changes.

- Incremental/Full Extract: used when the source system cannot provide push notifications but can identify the updated records and provide an extract of only those records (incremental); if changed records cannot be identified, the full data set is extracted.

- Choose a suitable cleansing mechanism according to the extracted data

- Data cleaning (also called cleansing or scrubbing) deals with detecting and separating invalid, duplicate, or inconsistent data, improving the quality and utility of the extracted data before it is transferred to a target database or Data Warehouse.

- Data cleansing involves checking for uniqueness, misspellings, redundancy/duplicates, values outside the domain range, data entry errors, referential integrity, contradictory values, naming conflicts at the schema level, structural conflicts, inconsistent aggregation, inconsistent timing, and more, according to your organization’s requirements.

- Once the source data has been cleansed, perform the required transformations.
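The audit step above can be sketched as a small column profiler. This is a minimal illustration, not VDS tooling: it assumes the extracted rows are available as Python dictionaries, treats `None` and empty strings as missing, and uses completeness, distinct count, and uniqueness as example metrics. The `profile` function and `ColumnProfile` type are hypothetical names.

```python
from dataclasses import dataclass
from typing import Any, Iterable, Mapping


@dataclass
class ColumnProfile:
    column: str
    completeness: float  # share of rows where the value is present
    distinct: int        # number of distinct present values
    is_unique: bool      # True if no present value occurs twice


def profile(rows: Iterable[Mapping[str, Any]], columns: list[str]) -> dict[str, ColumnProfile]:
    """Compute simple quality metrics for each column of an extracted data set."""
    rows = list(rows)
    total = len(rows)
    report = {}
    for col in columns:
        # Assumption: None and empty strings count as missing; adjust per source.
        present = [r.get(col) for r in rows if r.get(col) not in (None, "")]
        distinct = len(set(present))
        report[col] = ColumnProfile(
            column=col,
            completeness=len(present) / total if total else 0.0,
            distinct=distinct,
            is_unique=distinct == len(present),
        )
    return report


# Example: three extracted rows with one missing and one repeated email address.
rows = [
    {"id": 1, "email": "a@example.com"},
    {"id": 2, "email": None},
    {"id": 3, "email": "a@example.com"},
]
report = profile(rows, ["id", "email"])
for p in report.values():
    print(f"{p.column}: completeness={p.completeness:.2f}, distinct={p.distinct}, unique={p.is_unique}")
```

Metrics like these give a quick, quantity-independent view of whether a column is fit for its intended purpose, for example whether an `id` column can really serve as a key.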
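The incremental and full extraction strategies described above can be illustrated with a watermark-based incremental extract alongside a full extract. The sketch assumes a hypothetical `orders` table with an `updated_at` column holding ISO-8601 timestamps, and uses an in-memory SQLite database as a stand-in for the source system; the function names are illustrative only.

```python
import sqlite3


def extract_full(conn: sqlite3.Connection) -> list[tuple]:
    """Full extract: read every row from the (hypothetical) source table."""
    return conn.execute("SELECT id, amount, updated_at FROM orders ORDER BY id").fetchall()


def extract_incremental(conn: sqlite3.Connection, last_watermark: str) -> tuple[list[tuple], str]:
    """Incremental extract: read only rows modified after last_watermark.

    Assumes `updated_at` holds ISO-8601 text timestamps, which sort correctly as strings.
    Returns the changed rows and the watermark to store for the next run.
    """
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),  # bound parameter rather than string formatting
    ).fetchall()
    # Advance the watermark to the newest change seen; keep it if nothing changed.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


# Example source system: an in-memory SQLite database standing in for the real source.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [
        (1, 10.0, "2024-01-01T09:00:00"),
        (2, 25.5, "2024-01-02T12:30:00"),
        (3, 7.25, "2024-01-03T08:15:00"),
    ],
)
conn.commit()

all_rows = extract_full(conn)  # first load: everything
changed, watermark = extract_incremental(conn, "2024-01-01T23:59:59")  # later runs: deltas only
unchanged, same_watermark = extract_incremental(conn, watermark)  # nothing new since last run
```

A strict `>` comparison keeps the sketch simple, but rows committed later with a timestamp equal to the stored watermark would be missed; change data capture, or a small overlap window combined with downstream deduplication, are common ways to close that gap.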
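Several of the cleansing checks listed above (duplicates, values outside the domain range, and referential integrity) can be expressed as row-level rules. The sketch below assumes hypothetical `order_id`, `customer_id`, and `amount` fields and an arbitrary valid range for `amount`. Rejected rows are kept with a reason instead of being silently dropped, so they can be reviewed or corrected at the source.

```python
from typing import Any

Row = dict[str, Any]

# Hypothetical valid domain for order amounts; real limits come from business rules.
MIN_AMOUNT, MAX_AMOUNT = 0.0, 100_000.0


def cleanse(orders: list[Row], valid_customer_ids: set[int]) -> tuple[list[Row], list[tuple[Row, str]]]:
    """Split rows into clean rows and rejected rows, each rejection tagged with a reason."""
    clean: list[Row] = []
    rejected: list[tuple[Row, str]] = []
    seen_ids: set[int] = set()
    for row in orders:
        if row["order_id"] in seen_ids:
            rejected.append((row, "duplicate order_id"))
        elif not (MIN_AMOUNT < row["amount"] <= MAX_AMOUNT):
            rejected.append((row, "amount outside domain range"))
        elif row["customer_id"] not in valid_customer_ids:
            rejected.append((row, "unknown customer_id"))  # referential integrity
        else:
            clean.append(row)
        seen_ids.add(row["order_id"])  # later rows with this key count as duplicates
    return clean, rejected


orders = [
    {"order_id": 1, "customer_id": 10, "amount": 50.0},
    {"order_id": 1, "customer_id": 10, "amount": 50.0},  # duplicate
    {"order_id": 2, "customer_id": 10, "amount": -5.0},  # outside domain range
    {"order_id": 3, "customer_id": 99, "amount": 20.0},  # customer does not exist
    {"order_id": 4, "customer_id": 11, "amount": 75.0},
]
clean, rejected = cleanse(orders, valid_customer_ids={10, 11})
for row, reason in rejected:
    print(row["order_id"], reason)
```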

VDS practices the following during data transformations:

- Format Standardization

- Cleaning

- Deduplication

- Constraints Implementation

- Decoding of Fields

- Merging of Information

- Splitting Single Fields

- Calculated and Derived Values

- Summarization
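Several of the transformation types listed above (format standardization, decoding of fields, splitting single fields, calculated and derived values, and summarization) are shown together in this sketch. The field names, the `DD/MM/YYYY` source date format, and the `STATUS_CODES` lookup table are assumptions made for illustration.

```python
from collections import defaultdict
from datetime import datetime

# Hypothetical code table used to decode a single-letter status field.
STATUS_CODES = {"A": "Active", "I": "Inactive"}


def transform(record: dict) -> dict:
    """Apply several transformation types to one source record."""
    # Splitting a single field: "First Last" -> first_name, last_name.
    first, _, last = record["full_name"].strip().partition(" ")
    return {
        "first_name": first,
        "last_name": last,
        # Format standardization: source dates assumed DD/MM/YYYY; target uses ISO 8601.
        "order_date": datetime.strptime(record["order_date"], "%d/%m/%Y").date().isoformat(),
        # Format standardization: trim whitespace and use consistent casing.
        "region": record["region"].strip().upper(),
        # Decoding of fields: map source codes to readable values.
        "status": STATUS_CODES.get(record["status"], "Unknown"),
        # Calculated/derived value.
        "line_total": round(record["quantity"] * record["unit_price"], 2),
    }


def summarize(records: list[dict]) -> dict[str, float]:
    """Summarization: total sales per region."""
    totals: dict[str, float] = defaultdict(float)
    for r in records:
        totals[r["region"]] += r["line_total"]
    return dict(totals)


raw = [
    {"full_name": "Ada Lovelace", "order_date": "05/03/2024", "region": " east",
     "status": "A", "quantity": 3, "unit_price": 2.5},
    {"full_name": "Alan Turing", "order_date": "17/03/2024", "region": "EAST ",
     "status": "I", "quantity": 1, "unit_price": 10.0},
    {"full_name": "Grace Hopper", "order_date": "01/04/2024", "region": "west",
     "status": "X", "quantity": 2, "unit_price": 4.0},
]
rows = [transform(r) for r in raw]
summary = summarize(rows)
print(summary)
```

Standardizing `region` before summarizing matters here: without trimming and upper-casing, `" east"` and `"EAST "` would be counted as two different regions.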

- Know and understand the end destination for the data: where it will ultimately reside.

- Load the data:

VDS implements the load effectively, taking into account ordering, schema evolution, and monitoring capability.
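The load considerations above can be sketched against SQLite. This is a minimal example under stated assumptions, not a production loader: ordering is handled by loading parent tables before child tables so foreign-key checks pass, schema evolution is reduced to adding any new incoming columns with `ALTER TABLE ... ADD COLUMN`, and monitoring is simple per-table row-count logging. The `load` and `ensure_columns` functions and the table layouts are hypothetical.

```python
import logging
import sqlite3

logging.basicConfig(level=logging.INFO, format="%(levelname)s %(message)s")
log = logging.getLogger("etl.load")


def ensure_columns(conn: sqlite3.Connection, table: str, columns: list[str]) -> None:
    """Schema evolution (simplified): add incoming columns that the target table lacks."""
    existing = {info[1] for info in conn.execute(f"PRAGMA table_info({table})")}  # info[1] = column name
    for col in columns:
        if col not in existing:
            conn.execute(f"ALTER TABLE {table} ADD COLUMN {col}")
            log.info("schema change: added column %s.%s", table, col)


def load(conn: sqlite3.Connection, batches: list[tuple[str, list[dict]]]) -> None:
    """Load (table, rows) batches in the order given.

    Ordering: callers list parent tables before child tables so foreign-key checks pass.
    Monitoring: the number of rows loaded into each table is logged.
    Table and column names are interpolated into SQL, so they must come from trusted
    pipeline configuration, never from user input.
    """
    with conn:  # commit if every batch succeeds; roll back the open transaction on error
        for table, rows in batches:
            if not rows:
                continue
            cols = list(rows[0])
            ensure_columns(conn, table, cols)
            placeholders = ", ".join("?" for _ in cols)
            conn.executemany(
                f"INSERT INTO {table} ({', '.join(cols)}) VALUES ({placeholders})",
                [tuple(row[c] for c in cols) for row in rows],
            )
            log.info("loaded %d rows into %s", len(rows), table)


conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, "
    "customer_id INTEGER NOT NULL REFERENCES customers(id), amount REAL)"
)

# Parent table first, then child; the order rows carry a new "channel" column.
load(conn, [
    ("customers", [{"id": 1, "name": "Ada"}]),
    ("orders", [{"id": 100, "customer_id": 1, "amount": 7.5, "channel": "web"}]),
])

# Loading a child row before its parent violates the foreign key and is rolled back.
try:
    load(conn, [
        ("orders", [{"id": 101, "customer_id": 2, "amount": 1.0}]),
        ("customers", [{"id": 2, "name": "Alan"}]),
    ])
    wrong_order_failed = False
except sqlite3.IntegrityError:
    wrong_order_failed = True
```

In a real pipeline the load order would usually be derived from the foreign-key graph rather than listed by hand, and schema changes would typically be reviewed rather than applied automatically.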